Server Load Balancing Strategies for High Traffic Sites: A Comprehensive Guide

This guide covers server load balancing strategies for high traffic sites: how to distribute traffic effectively, absorb sudden spikes, and keep the user experience consistent under load.

It walks through the main load balancing algorithms, hardware versus software options, SSL offloading, auto-scaling techniques, geographic strategies, and monitoring tools.

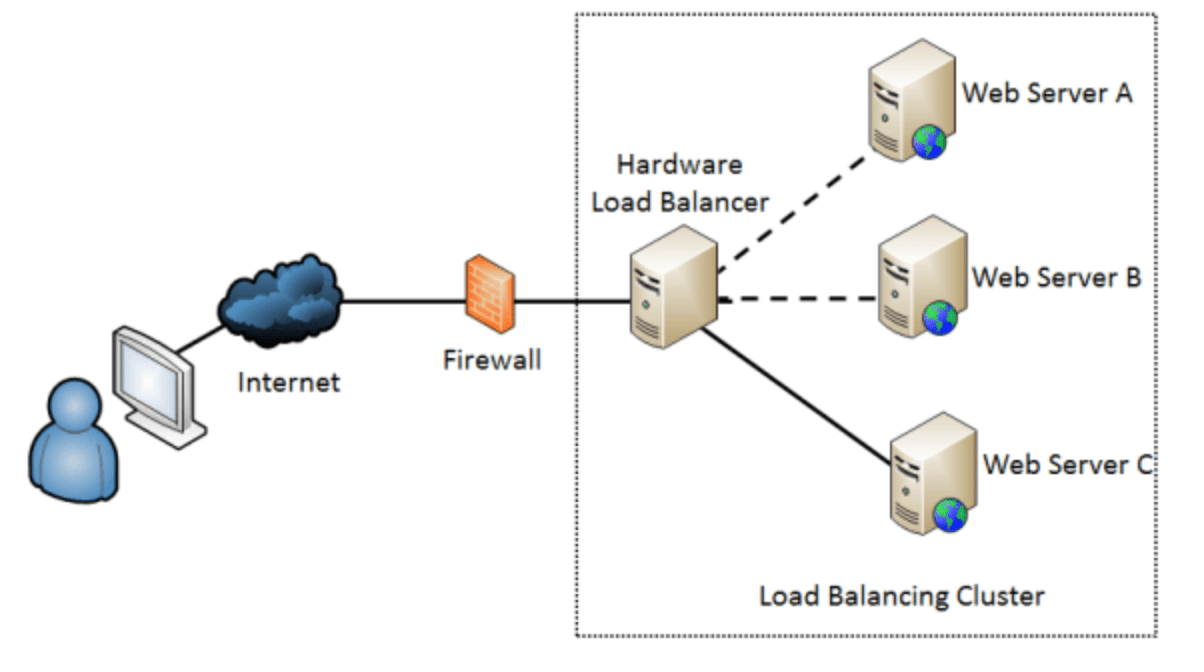

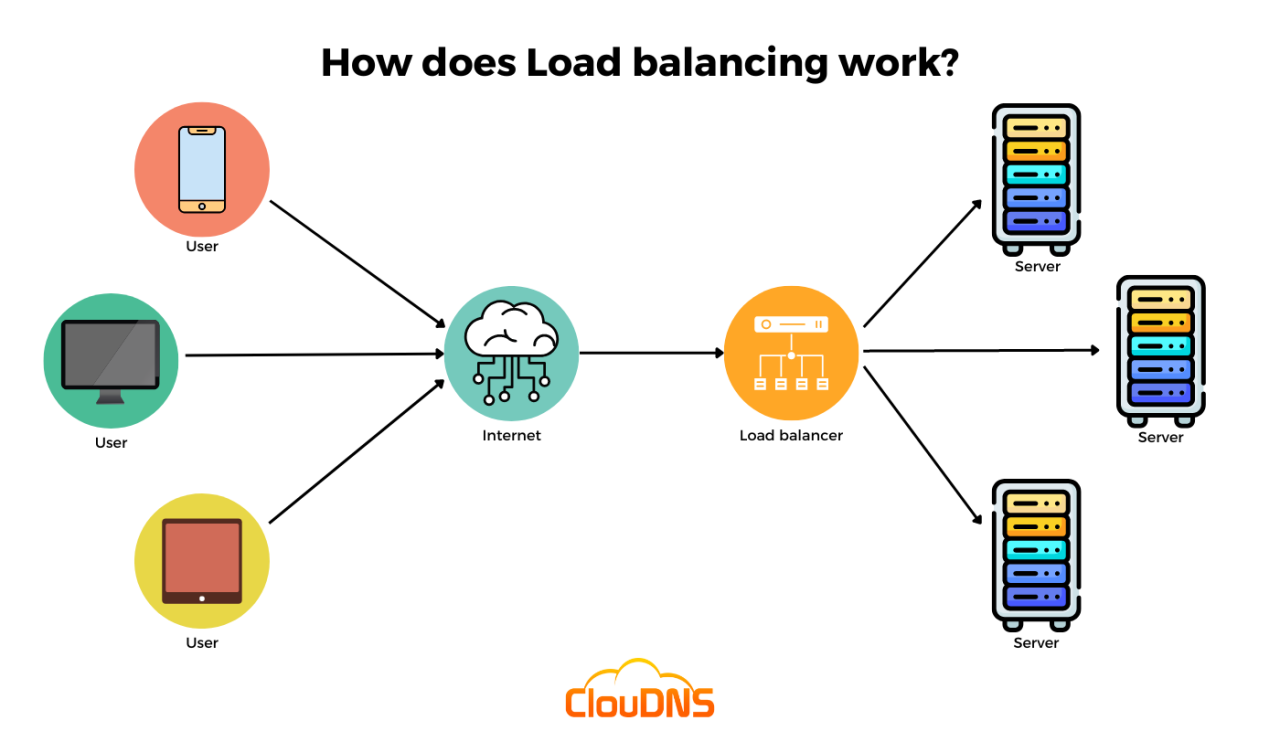

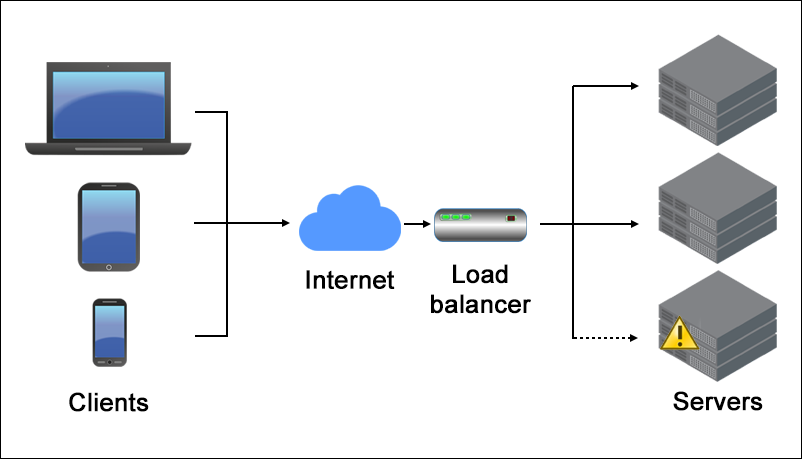

Overview of Server Load Balancing

Server load balancing is a technique used to distribute incoming network traffic across multiple servers. This helps ensure that no single server becomes overwhelmed with requests, which improves the performance, availability, and reliability of websites and applications.

High traffic sites such as Google, Facebook, Amazon, and Netflix benefit greatly from server load balancing. These websites receive millions of visitors daily, and distributing the load across multiple servers allows them to handle that volume efficiently.

Load balancing helps prevent server overloads, reduces downtime, and keeps the user experience consistent. By spreading incoming requests across servers, it also improves resource utilization and scalability, so a site can absorb traffic spikes that would overwhelm a single machine.

Types of Load Balancing Algorithms

Load balancing algorithms play a crucial role in distributing traffic efficiently across servers. Different algorithms have their own unique way of managing incoming requests. Let's explore some common types of load balancing algorithms and how they work.

Round Robin

Round Robin is one of the simplest load balancing algorithms where requests are distributed evenly across servers in a circular manner. Each server takes turns handling incoming requests, ensuring a balanced workload distribution. This algorithm is easy to implement and works well for systems with similar server capacities.
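The rotation described above can be sketched in a few lines of Python. This is a minimal illustration, not a production balancer: the class name `RoundRobinBalancer` and the server names are hypothetical, and health checks are left out.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hand out backends in a fixed circular order."""

    def __init__(self, servers):
        servers = list(servers)
        if not servers:
            raise ValueError("at least one server is required")
        # cycle() repeats the list endlessly, which gives the circular order.
        self._ring = cycle(servers)

    def next_server(self):
        # Each call advances one position around the ring.
        return next(self._ring)


# Hypothetical server names, used only for illustration.
balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])
picks = [balancer.next_server() for _ in range(5)]
print(picks)  # ['app-1', 'app-2', 'app-3', 'app-1', 'app-2']
```

Because the rotation ignores how busy each server is, it works best when servers and requests are roughly uniform, as noted above.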

Least Connection

The Least Connection algorithm directs each new request to the server with the fewest active connections at that moment. Because it reacts to current load instead of following a fixed rotation, it suits workloads where request durations vary widely or where some servers are temporarily busier than others. When servers differ in capacity, a weighted variant is typically used so that larger servers accept proportionally more connections.
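A minimal Python sketch of this selection rule follows. It assumes the balancer itself tracks connection counts in memory; the class name `LeastConnectionBalancer` and the `acquire`/`release` methods are hypothetical names chosen for the example.

```python
class LeastConnectionBalancer:
    """Pick the backend with the fewest active connections."""

    def __init__(self, servers):
        # Active connection count per server, all starting at zero.
        self.active = {server: 0 for server in servers}
        if not self.active:
            raise ValueError("at least one server is required")

    def acquire(self):
        # min() returns the first server with the lowest count,
        # so ties go to the server listed earliest.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call this when a connection closes.
        if self.active[server] > 0:
            self.active[server] -= 1


lb = LeastConnectionBalancer(["app-1", "app-2"])
first = lb.acquire()    # both idle, tie goes to app-1
second = lb.acquire()   # app-2 now has fewer connections
lb.release("app-2")     # app-2 finishes its request
third = lb.acquire()    # app-2 is the least loaded again
print(first, second, third)  # app-1 app-2 app-2
```

The third pick shows the difference from Round Robin: a fixed rotation would have returned to `app-1`, while this rule follows the server that is actually free.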

IP Hash

The IP Hash algorithm uses the client's IP address to determine which server should handle the request. By hashing the IP address, the algorithm consistently sends a given client's requests to the same server. This is useful for session persistence in stateful applications, although many clients that share one address (for example, behind a corporate NAT) will all land on the same server.
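The mapping from address to server can be sketched as follows. This is an illustrative assumption, not the scheme of any specific load balancer: the function name `pick_server`, the choice of SHA-256, and the addresses are all hypothetical.

```python
import hashlib


def pick_server(client_ip, servers):
    """Map a client IP to one server, the same one on every call."""
    if not servers:
        raise ValueError("at least one server is required")
    # hashlib is used instead of the built-in hash(), whose string hashes
    # are randomized per process and would break consistency across restarts.
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


# Hypothetical server names and documentation-range IP addresses.
servers = ["app-1", "app-2", "app-3"]
chosen = pick_server("203.0.113.7", servers)
repeat = pick_server("203.0.113.7", servers)
print(chosen == repeat)  # True
```

One design consequence of the modulo step: adding or removing a server changes `len(servers)`, so most clients get remapped. Consistent hashing is the usual remedy when that matters.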

Real-World Examples

- Round Robin: A small e-commerce website with multiple servers of similar capacities can benefit from Round Robin to evenly distribute incoming traffic.

- Least Connection: A streaming platform may use the Least Connection algorithm to route users to less busy servers to ensure a smooth streaming experience.

- IP Hash: Banking applications that keep session state on the server can use the IP Hash algorithm so that each user's requests keep reaching the server that holds their session.

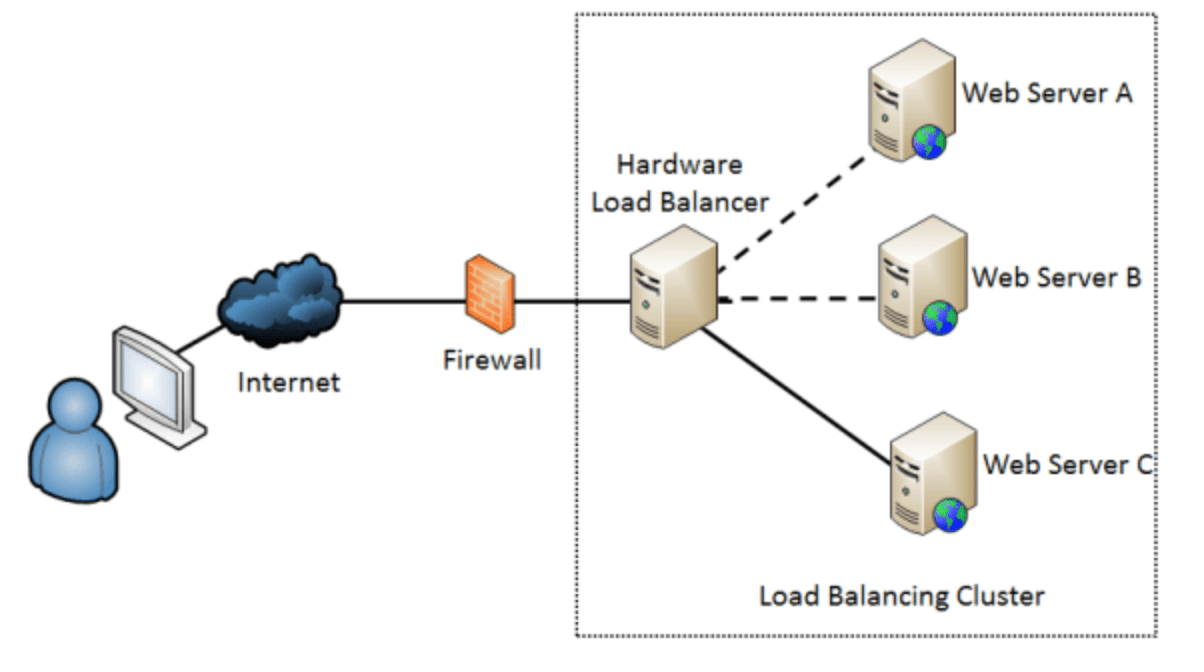

Hardware vs. Software Load Balancers

When it comes to server load balancing, one crucial decision that organizations need to make is choosing between hardware and software load balancers. Each type has its own set of advantages and disadvantages, which can significantly impact the performance of high traffic sites.

Differences Between Hardware and Software Load Balancers

Hardware load balancers are physical devices dedicated solely to load balancing, while software load balancers are programs that can be installed on existing servers. Hardware load balancers typically offer higher performance and reliability due to their specialized hardware components, while software load balancers are more flexible and cost-effective.

Pros and Cons of Using Hardware Load Balancers for High Traffic Sites

- Pros: Hardware load balancers can handle large amounts of traffic efficiently, provide better security features, and offer dedicated support from manufacturers.

- Cons: Hardware load balancers are typically more expensive, require physical space in data centers, and may have limited scalability compared to software load balancers.

Insights into Scalability and Maintenance of Both Types of Load Balancers

Hardware load balancers scale by adding or upgrading appliances, which involves procurement lead time and rack space, and each device's capacity is fixed by its model. Software load balancers can be scaled vertically by giving the host more resources or horizontally by launching more instances, which suits cloud and virtualized environments, but the balancer software and its operating system then need regular maintenance and updates.

Importance of SSL Offloading in Load Balancing

SSL offloading plays a crucial role in load balancing for high traffic sites by relieving the burden on backend servers and improving overall performance. Let's delve into the significance of SSL offloading and its benefits in load balancing strategies.

What is SSL Offloading and Its Role

SSL offloading, also known as SSL termination, means decrypting SSL/TLS traffic at the load balancer before forwarding requests to backend servers. This moves the resource-intensive work of TLS handshakes and decryption off the servers, leaving them free to handle application logic.

By handling SSL encryption and decryption, load balancers can improve response times, reduce server load, and enhance overall system performance.

Benefits of Offloading SSL

- Improved Performance: SSL offloading reduces the processing load on backend servers, leading to faster response times and improved user experience.

- Scalability: By offloading SSL to load balancers, you can easily scale your infrastructure to handle increasing traffic without overburdening servers.

- Security: Terminating TLS in one place lets you enforce protocol versions, cipher choices, and certificate renewal centrally instead of configuring each server separately.

- Cost-Effectiveness: Certificates are installed and renewed on the load balancer instead of on every server, which reduces certificate and administration costs.

Best Practices for Implementing SSL Offloading

- Use Strong Encryption: Ensure that load balancers support up-to-date SSL/TLS protocols and use strong encryption algorithms to maintain security.

- Implement Certificate Management: Regularly update SSL certificates on load balancers and follow best practices for certificate management to prevent security vulnerabilities.

- Enable End-to-End Encryption Where Needed: Traffic between the load balancer and backend servers is unencrypted after SSL offloading. If that traffic crosses an untrusted network or carries regulated data, re-encrypt it between the load balancer and the backends.

- Monitor Performance: Monitor the performance of load balancers handling SSL offloading to identify any bottlenecks or issues that may impact system performance.
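As a rough sketch of the "Use Strong Encryption" practice, the snippet below builds a server-side TLS context of the kind a Python-based terminating proxy might use. The function name `build_termination_context` and its certificate path parameters are hypothetical, and TLS 1.2 as the minimum version is an assumption for the example; choose the floor your own policy requires.

```python
import ssl


def build_termination_context(cert_file=None, key_file=None):
    """Create a server-side TLS context that refuses old protocol versions."""
    # Purpose.CLIENT_AUTH produces a context meant for the server side,
    # that is, the side that accepts connections from clients.
    context = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    # Assumption for this sketch: reject anything older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    # Certificate paths are hypothetical; supply real ones before serving.
    if cert_file and key_file:
        context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return context


context = build_termination_context()
print(context.minimum_version.name)  # TLSv1_2
```

Dedicated load balancers expose the same idea through their own configuration settings, so treat this as an illustration of the policy, not of any product's syntax.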

Auto-Scaling Techniques for Load Balancers

Auto-scaling is a crucial feature for managing sudden spikes in website traffic. It automatically adjusts the number of servers behind the load balancer based on current demand, which keeps performance steady and reduces the risk of downtime.

Configuring Load Balancers for Auto-Scaling

Load balancers can be configured to auto-scale by setting up rules based on traffic patterns. For example, if the number of requests exceeds a certain threshold, additional servers can be automatically spun up to handle the load. Similarly, when traffic decreases, unnecessary servers can be shut down to save resources.

- Utilize tools like Amazon EC2 Auto Scaling or Google Cloud Autoscaler to automate the scaling process based on predefined metrics.

- Implement dynamic scaling policies that consider factors like CPU utilization, memory usage, and network traffic to make informed scaling decisions.

- Regularly monitor server performance and adjust auto-scaling configurations as needed to ensure efficient resource allocation.
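The threshold-style rules above can be sketched as a small decision function. This is a simple proportional rule under stated assumptions, not the algorithm of any particular cloud service: the function name `desired_server_count`, the 60% CPU target, and the fleet limits are all hypothetical values.

```python
import math


def desired_server_count(current, cpu_percent, target_cpu=60.0,
                         min_servers=2, max_servers=20):
    """Return how many servers would bring average CPU near the target."""
    if current < 1:
        raise ValueError("current must be at least 1")
    # If 4 servers run at 90% and the target is 60%, the same work
    # spread over 4 * 90 / 60 = 6 servers would sit at the target.
    wanted = math.ceil(current * cpu_percent / target_cpu)
    # Clamp to the configured fleet limits.
    return max(min_servers, min(max_servers, wanted))


print(desired_server_count(4, 90.0))    # 6  (scale out)
print(desired_server_count(4, 15.0))    # 2  (scale in, floor applies)
print(desired_server_count(10, 150.0))  # 20 (ceiling applies)
```

The minimum keeps redundancy during quiet periods and the maximum caps cost during a runaway spike. Real policies usually also add a cooldown period so the fleet does not oscillate.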

Geographic Load Balancing Strategies

Geographic load balancing involves distributing incoming traffic to different servers based on the geographical location of the user. This strategy helps improve performance, reduce latency, and provide a better user experience.
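One simple way to express "route to the nearest location" is to compare great-circle distances, as in the sketch below. The region names and coordinates are hypothetical, and distance is only a proxy for latency; production systems typically rely on GeoIP databases or measured latency.

```python
import math

# Hypothetical regions with approximate (latitude, longitude) coordinates.
REGIONS = {
    "us-east": (39.0, -77.5),
    "eu-west": (53.3, -6.3),
    "ap-southeast": (1.35, 103.8),
}


def haversine_km(a, b):
    """Great-circle distance in kilometres between two (lat, lon) points."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat = lat2 - lat1
    dlon = lon2 - lon1
    h = (math.sin(dlat / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))


def nearest_region(client_location, regions=REGIONS):
    # Pick the region whose coordinates are closest to the client.
    return min(regions,
               key=lambda name: haversine_km(client_location, regions[name]))


print(nearest_region((48.85, 2.35)))    # eu-west (client near Paris)
print(nearest_region((35.68, 139.69)))  # ap-southeast (client near Tokyo)
```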

Benefits of Geographic Load Balancing

- Improved Performance: By routing users to the nearest server, geographic load balancing reduces latency and improves response times.

- Redundancy and Reliability: Distributing traffic across multiple geographical locations enhances redundancy and ensures high availability in case of server failures.

- Compliance with Data Regulations: Geographic load balancing helps organizations comply with data privacy regulations by ensuring data is processed in the appropriate geographical location.

Challenges and Considerations

- Complexity: Implementing geographic load balancing requires a thorough understanding of network topology and may involve additional configuration and monitoring.

- Data Synchronization: Ensuring data consistency across geographically distributed servers can be challenging and may require synchronization mechanisms.

- Cost: Setting up and maintaining geographically dispersed servers can be costlier than centralized server setups.

Monitoring and Analytics for Load Balancers

Monitoring and analytics play a crucial role in optimizing the performance of load balancers, especially for high-traffic sites. By keeping a close eye on key metrics and utilizing the right tools, organizations can ensure efficient distribution of traffic and seamless operation of their systems.

Importance of Monitoring and Analytics

Monitoring and analytics provide valuable insights into the health and efficiency of load balancers. By tracking various metrics, such as server response times, error rates, traffic distribution, and resource utilization, IT teams can identify bottlenecks, anticipate potential issues, and make data-driven decisions to enhance performance.

- Server Response Times: Monitoring the response times of servers helps in identifying slow-performing servers and optimizing load distribution.

- Error Rates: Tracking error rates can highlight issues such as server overload or misconfigurations that need immediate attention.

- Traffic Distribution: Analyzing traffic patterns helps in adjusting load balancing algorithms to evenly distribute incoming requests.

- Resource Utilization: Monitoring resource utilization provides insights into server capacity and helps in scaling resources as needed.
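Two of the metrics above, error rate and response time, can be computed from raw request samples as sketched here. The sample data is invented for illustration, the helper names are hypothetical, and the percentile uses the nearest-rank method; monitoring tools may use other definitions.

```python
import math


def error_rate(status_codes):
    """Fraction of responses that were server errors (HTTP 5xx)."""
    if not status_codes:
        return 0.0
    errors = sum(1 for code in status_codes if code >= 500)
    return errors / len(status_codes)


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


# Invented sample data for one backend server.
latencies_ms = [120, 95, 110, 480, 105, 99, 101, 130, 97, 102]
statuses = [200] * 8 + [502, 503]

print(percentile(latencies_ms, 50))  # 102
print(percentile(latencies_ms, 95))  # 480
print(error_rate(statuses))          # 0.2
```

The gap between the median (102 ms) and the 95th percentile (480 ms) is the kind of signal that averages hide, which is why percentile latency is often tracked alongside error rate.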

Tools and Techniques for Monitoring and Analyzing

Various tools and techniques are available for monitoring and analyzing load balancer performance. Popular options include Zabbix, Nagios, Prometheus, Grafana, the ELK Stack, New Relic, Datadog, and AWS CloudWatch.

- Zabbix: Offers monitoring capabilities for network devices, servers, and applications, providing real-time insights into performance metrics.

- Prometheus and Grafana: A powerful combination for metric collection and visualization, enabling users to create custom dashboards for monitoring load balancer performance.

- ELK Stack: Elasticsearch, Logstash, and Kibana together offer log analysis and visualization capabilities, helping identify trends and anomalies in load balancer logs.

- New Relic and Datadog: Provide comprehensive monitoring solutions with alerting features, allowing proactive management of load balancer performance.

- AWS CloudWatch: Amazon's monitoring service offers insights into AWS resources, including load balancers, to track performance and troubleshoot issues.

Final Wrap-Up

In conclusion, mastering server load balancing strategies is paramount for ensuring your high traffic site operates smoothly and efficiently. By implementing the right techniques and staying abreast of industry best practices, you can effectively manage traffic surges and deliver a seamless user experience to your visitors.

Popular Questions

What is SSL offloading and why is it important?

SSL offloading involves decrypting SSL traffic at the load balancer before forwarding it to backend servers, reducing their workload and enhancing performance.

How does auto-scaling benefit load balancers?

Auto-scaling adjusts the number of servers behind the load balancer as traffic demand changes, so there is enough capacity during sudden spikes and less idle capacity when traffic drops.

What are the key metrics to track for load balancers handling high traffic?

Key metrics include server response time, error rates, throughput, and server health to ensure efficient load balancing under high traffic conditions.